A couple of months ago I was able to address some smaller, long-standing issues with the Kentico Community Portal. But my work on these improvements was different from previous updates - I used AI (GitHub Copilot with Claude 3.5 Sonnet) to help me author a large number of the changes!

Below are my experiences and what I learned working with an AI agent in a real-world Xperience by Kentico project.

Before we begin, here are my TL;DR takeaways on using AI for software development.

- Don't just use AI to do the work for you, use it to accelerate you doing the work. Use it to give you more insight, try more things, do the same work faster, and catch more bugs.

- Then, with AI as a tool, you will find yourself doing less of some tasks and more of others. This is normal. Think back on your career (long or short) and remember how you aren't doing the same things today that you did when you first started.

- Current LLMs are non-deterministic - use this to your advantage and generate several variations of a solution. Pick the one you like the most. Don't accept the defaults.

- AI can look at your project like a developer new to your team. Use this to identify places where you can improve context. This could be docs, ADRs, comments, structure and organization, tools, etc.

- Use AI to learn new things, because with AI at our fingertips we will be expected to know more not less. But don't just learn facts, learn concepts and perspectives. Software architects are notorious for saying "it depends" because they understand tradeoffs - AI can help you explore and learn these tradeoffs.

- You can even learn things about your own project! Are things structured or named inconsistently? Which package updates led to the most regressions (using Git)? What project dependencies could be areas of concern in the future?

- Share those concerns with the AI to identify improvement opportunities that you could add to a tech-backlog "watch list".

- Ask the AI to challenge your assumptions and poke holes in your ideas. Even if its ideas aren't useful, it will still get you to reflect. Often ending your prompt with "What am I missing?" is more valuable than just giving instructions with the assumption that you know everything already.

Forms design and user experience

The login, registration, and password recovery pages were (quickly) authored right before the Kentico Community Portal was launched in October 2023. I just needed a reliable experience - the design was far less important.

These pages now feature an updated design and improved user experience.

What's important isn't the updated design and UX (it's fine 🤷), but the approach I took to build them.

Although I've been doing web development for more than 15 years, I'm not an expert in design, CSS, or HTML. I can complete most work in this space, but it takes me a lot longer than many other people. That's why I used GitHub Copilot and Claude 3.5 Sonnet to change the markup for me.

If you watch the full video above you'll realize the code generated by Claude doesn't match what you can find on the Kentico Community Portal. I didn't record my initial development, so this is a re-enactment 😉.

In my opinion, this is a feature of the current generation of AI. I can quickly (relative to the amount of time this work would normally take me) iterate over several design variations and then select the one I like most. Once I find a design I like, I manually adjust some of the content, HTML, and CSS classes.

In the end, Copilot didn't do all the work for me. It instead presented me with several options, explained the changes it made each time, and I used my knowledge and experience in web development to select one I felt achieved my goals (the chat prompt).

I don't think of an AI copilot (pun intended) as something that solves the problem for me. Instead, it helps me accomplish things more quickly. I can (and do) still:

- Stop and think about the code generated

- Look for opportunities to learn something about the changes made by the LLM

- Fine-tune the generated code myself to complete the last 5-50% of the work

After improving the login page, I used Copilot to help me update the registration and password recovery experiences. This went smoothly because I already had an example for the AI. This "context" is really important and can make the difference between a frustrating AI experience and a fluid one.

When I first started using AI to help me do various tasks (software development, market research, content proofreading), I felt frustrated about how important supplying clear instructions and context was.

It could be the marketing hype around AI didn't match the real-world experiences I had. Another cause could be similar to doing a new exercise or sport - you have to build strength in muscles that you might not have used as much before. I think my "explanation" muscle isn't as good as I'd like to think and I feel like I shouldn't have to use it anyway because AI should just work better.

Improved search

The old search experience allowed visitors to filter their results by different facets (attributes and taxonomy tags), sort the results based on some predefined ordering, and search for specific terms in the discussion question and answers.

Unfortunately, this user experience wasn't great. Due to the large number of taxonomy tags, visitors had to scroll quite far down the page to view all available tag filters. They also needed to use their browser search to find a specific tag.

Additionally, when checking a filter checkbox the page would immediately "jump" when the results were displayed. This didn't happen when sorting the results or searching for a term. It was also difficult to quickly see which taxonomy tag filters were applied to the current search results.

Several large UI changes were made to improve the discoverability and readability of the search filtering and results.

All filtering is now displayed along the left side of the page on desktop and below the search term box on mobile. The "New discussion" CTA was moved to the side, allowing more space for search results above the fold.

Taxonomy tag filters are now contained in their own smaller area of the page with an instant search box to quickly discover and find filters. Once a filter has been checked, the "Search" CTA must now be clicked before the results are updated, making the experience more consistent with the rest of the search UI.

We also created a "Selected topics" section that dynamically updates at the top of the tags list, showing which tag filters are applied for the current results.
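To make the tag search and "Selected topics" behavior concrete, here is a minimal TypeScript sketch of the logic involved. It is not the portal's actual code (that lives in Alpine.js and Razor and isn't shown in this post); the `Tag` shape, the function names, and the sample tags are all assumptions for illustration.

```typescript
// Hypothetical shape for a taxonomy tag; the portal's real model is not shown in this post.
interface Tag {
  id: string;
  label: string;
}

// Return the tags whose label contains the search term (case-insensitive).
// An empty or whitespace-only term returns every tag.
function filterTags(tags: Tag[], term: string): Tag[] {
  const needle = term.trim().toLowerCase();
  if (needle === "") {
    return tags;
  }
  return tags.filter((tag) => tag.label.toLowerCase().includes(needle));
}

// Return the checked tags, in their original list order,
// which is what a "Selected topics" summary would display.
function selectedTopics(tags: Tag[], checkedIds: Set<string>): Tag[] {
  return tags.filter((tag) => checkedIds.has(tag.id));
}

// Sample data, made up for this example.
const tags: Tag[] = [
  { id: "1", label: "Content modeling" },
  { id: "2", label: "Email marketing" },
  { id: "3", label: "ASP.NET Core" },
];

console.log(filterTags(tags, "mark").map((t) => t.label));
console.log(selectedTopics(tags, new Set(["3", "1"])).map((t) => t.label));
```

Keeping the filtering as a pure function over the tag list means the checked state is untouched while a visitor types, so the "Selected topics" summary can stay accurate even when a checked tag is filtered out of view.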

An agentic approach to updating search

Similar to the login and registration form updates, I used GitHub Copilot to quickly explore a couple of different search layouts.

I won't show them here, but there were at least 3-4 other rearrangements of the filters, CTAs, and their behaviors that I tried. I think this is a key takeaway! I was able to try several variations of the UX changes in the browser - experiencing the UX and asking myself, "Do I like this? Does this help me search? Does this feel consistent and predictable?"

The version in production today was likely the second one Copilot created, which is to say that I went back to an earlier option after exploring some others. The agent also came up with the tag search box - I just mentioned in my prompt that the tags made the page very long and were hard to browse.

Before adopting an agentic approach I could have tried maybe 2 different layouts, picked the better one, and spent most of my time refining that layout until it worked well. If I happened to pick the wrong path at the start, I would usually spend a lot of time working it back towards a better solution and think to myself, "It would have been nice to try this early on!" But, I never had enough time to explore more than 2 variations.

It's also worth noting that the variations generated by GitHub Copilot were not just prototypes. They correctly implemented the Alpine.js and HTMX features used for tag search and search form submissions respectively.

Sometimes they required minor fixes or adjustments when I preferred a different naming convention or approach. If I had been better at providing context to the agent, I would have added repository-level Copilot instructions for these libraries.

What surprised me the most was how adept Copilot was at using these well documented, but significantly less popular libraries and combining them with ASP.NET Core Razor code.

While you might not be able to 1-shot prompt an entire Xperience by Kentico feature and get exactly what you want, you don't have to write React and Next.js to get value out of agentic AI software development tools available today.

The key to agentic tools is managing context!

Some tools make this easier (Cursor) and others have huge payoffs if you learn to master their context (Claude Code). Either way, thinking about context will pay off with any modern agentic development tool.

Q&A discussion tagging

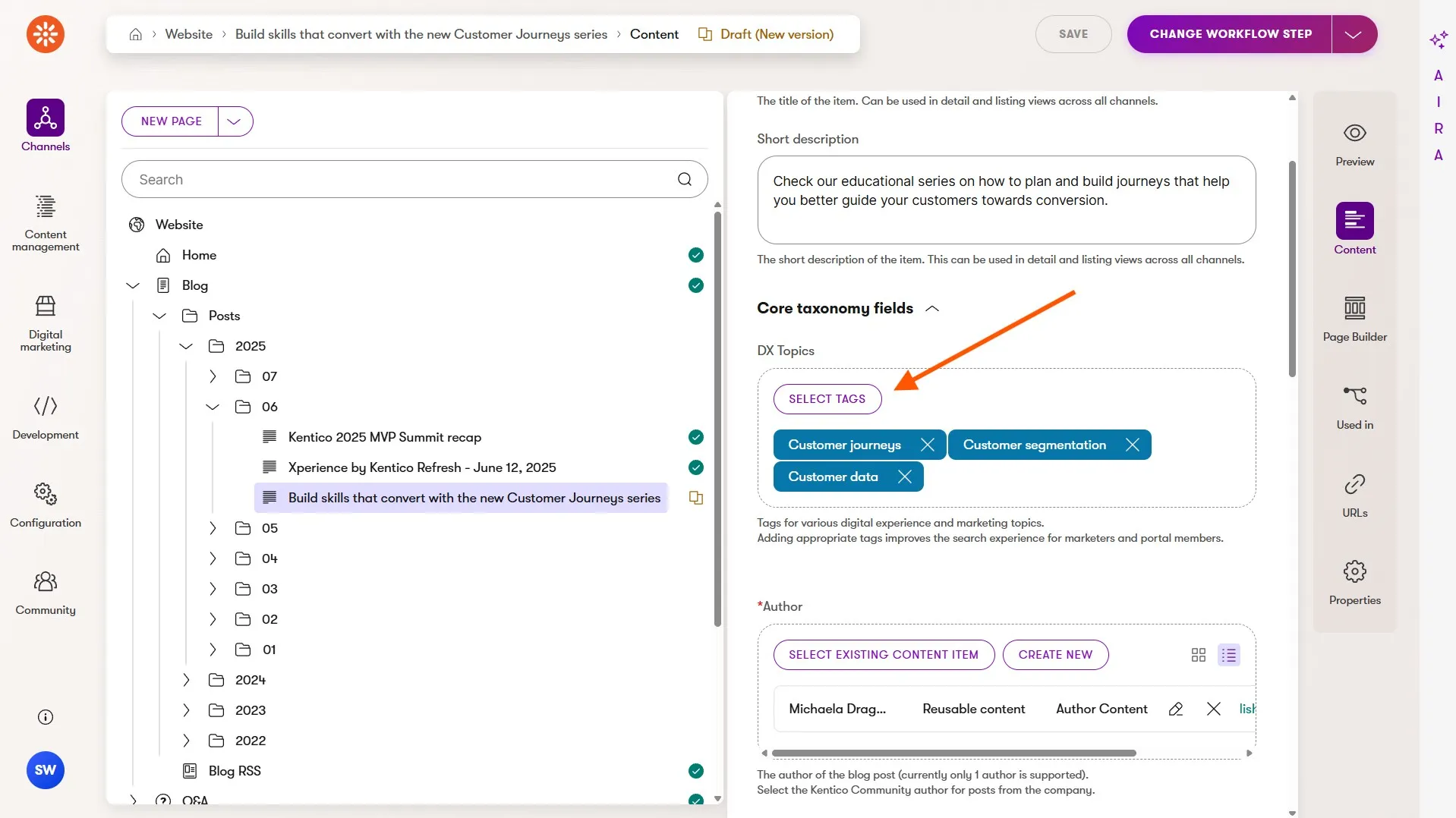

Taxonomy tag filtering for the blog search experience is driven by the tags that blog post authors apply to the posts within Xperience's administration UI.

If we want the tag filtering search experience for Q&A discussions to be useful we need a way for community members to assign tags to their discussions. So far, only blog post auto-generated discussions have had this ability.

Now, members can (and should!) assign tags to Q&A discussions to help drive the search experience. The selectable tags come from the same taxonomy used for blog posts and a variety of other content in the Kentico Community Portal, all based around digital marketing concepts, software development topics, and Xperience by Kentico product features.

The process of creating this tagging experience matched the others covered so far - agentic AI helped me try on several variations, suggested solutions to the challenges and requirements I outlined, and allowed me to try more than I would normally be able to.

The initial design Copilot tried had the tag selection below the markdown editor. I felt this would be easy to miss and I wanted to encourage tag selection to make Q&A discussion search better for everyone. It also used a tabbed design, based on the tag categories, which made it difficult to see all available tags at once.

After some prompting, the AI agent moved the tagging above the markdown editor, placed it into an accordion, and again added the Alpine.js tag search. It also added client-side validation for the business rule that member-generated discussions are limited to a maximum of 4 tags.
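The 4-tag rule is simple enough to sketch. This is a minimal TypeScript version under my own assumptions, not the code Copilot generated for the portal: the constant, the result type, the function name, and the message wording are all hypothetical. Only the limit of 4 comes from the actual feature.

```typescript
// The limit of 4 comes from the business rule described above.
// Every name in this block is hypothetical.
const MAX_DISCUSSION_TAGS = 4;

interface TagValidationResult {
  valid: boolean;
  message: string;
}

// Check a member's tag selection against the maximum allowed.
function validateTagSelection(selectedTagIds: string[]): TagValidationResult {
  // Count each tag once, even if the same id appears twice in the input.
  const uniqueCount = new Set(selectedTagIds).size;

  if (uniqueCount > MAX_DISCUSSION_TAGS) {
    return {
      valid: false,
      message: `Select at most ${MAX_DISCUSSION_TAGS} tags (${uniqueCount} selected).`,
    };
  }

  return { valid: true, message: "" };
}

console.log(validateTagSelection(["a", "b", "c", "d"]).valid);
console.log(validateTagSelection(["a", "b", "c", "d", "e"]).message);
```

A check like this only improves the form experience. Client-side validation can be bypassed, so the same limit would normally be enforced again on the server when the discussion is submitted.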

All of the CSS and semantic HTML to make this UI work correctly with the Kentico Community Portal's Bootstrap 5.3 design foundation was authored by AI. Not only was I able to iterate through a few design variations but the code authoring was significantly faster than I would have been able to accomplish by repeatedly checking Bootstrap docs.

Digital food for thought

Because I already shared my TL;DR tips at the beginning of this post, I'll instead close with a reflection I've shared with others.

Upfront vs long term costs

When I started doing a lot of client-side development back in 2014-15 (AngularJS and React), I transitioned from my well-understood console.log() debugging approach to using the browser JS debugger. I learned a lot about closures, stack frames, how to name functions to improve the call stack, the JS event loop and timeouts/promises, source maps (😭 they were terrible back then), etc.

It took a lot of extra work to learn all of this and my console.log() debugging was a tool I already had. So, I traded time I could have spent working on delivering features (I was working on a product engineering team at the time) to learn a whole new technology/tool set.

Why?

Because, the app I was building was growing in complexity and was larger than anything I had worked on before. I needed more tools to help me manage that complexity and I knew console.log() would not scale.

Did I completely drop console.log()? No! I still use it 😅 because it's still a useful tool. But, now I have a bigger toolbox!

The learning curve of tools is typically spiky and some (like .NET SOS and WinDbg) are absolutely painful to get started with. AI appears to have its own unique learning curve. I think it's one that's worth learning as long as we remember it's adding to our toolbox, not becoming "the one tool".

By the way, one of my teammates on that front-end project in 2015 had been a front-end developer a lot longer than I had (I came from C#, ASP.NET MVC, and Kentico Portal Engine) and he was so much better at building UIs, components, and even writing JS than I was. However, the entire time he worked on that project he never switched from using console.log() to using the JS debugger.

Many of the more advanced features became my responsibility even though I had less experience because I had a bigger toolbox and slowed down early on so I could speed up later.

Sean Wright

I'm Lead Product Evangelist at Kentico. I'm part of the Product team at Kentico along with David Slavik, Dave Komárek, Debbie Tucek, Martin Králík, and Martina Škantárová. My responsibilities include helping partners, customers, and the entire Kentico community understand the strategy and value of Xperience by Kentico. I'm also responsible for Kentico's Community Programs.